The Rise and Impact of Large Language Models

Exploring the Capabilities, Challenges, and Future of AI's Linguistic Prowess

Table of Contents

Introduction to Large Language Models (LLMs)

Definition and basic concept of LLMs

Brief history and evolution of LLMs

Importance and relevance in today’s technology landscape

Core Principles and Technologies

Overview of Artificial Intelligence and Machine Learning

Understanding Neural Networks

Deep Learning in the context of LLMs

Natural Language Processing (NLP)

Architecture of Large Language Models

Explanation of key components (like Transformers)

Layers and their functions in LLMs

Role of Attention Mechanisms

Data processing and model training methodologies

Training Large Language Models

Data collection and preprocessing

Supervised vs. unsupervised learning approaches

Challenges in training (like computational requirements, data quality and bias)

Fine-tuning models for specific tasks or languages

Applications of Large Language Models

Natural Language Understanding (NLU) and Generation (NLG)

Use in chatbots and virtual assistants

Applications in content creation, summarization, and translation

Ethical considerations and responsible AI use

Challenges and Limitations

Understanding biases in LLMs

Ethical concerns and societal impact

Computational and environmental cost

Limitations in understanding context and nuance

Future of Large Language Models

Advances in model architectures and algorithms

The role of LLMs in shaping future technologies

Integration with other AI technologies

Ethical and regulatory considerations for future development

Conclusion

Summarizing the state of LLMs today

Potential future developments and impacts

Final thoughts on the responsible use and evolution of LLMs

Introduction to Large Language Models (LLMs)

1. Definition and Basic Concept of LLMs

Large Language Models (LLMs) are machine learning models designed to process, interpret, and generate human language. They belong to the broader field of artificial intelligence and are distinguished by their scale: they are trained on very large volumes of text and often contain billions of parameters. By learning statistical patterns from this text, including grammar, word usage, and stylistic idiosyncrasies, these models can perform a wide range of language-based tasks.

2. Brief History and Evolution of LLMs

The development of LLMs has been a gradual journey. Early conversational programs such as ELIZA (1960s) and PARRY (1970s) were not learned models at all; they followed simple hand-written rules. The introduction of statistical methods, such as n-gram language models, brought significant advances in the 1980s and 1990s. The last decade, however, has been transformative: deep learning, and in particular the transformer architecture introduced in 2017, led to models such as GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers), which substantially advanced the state of the art in language understanding and generation.

3. Importance and Relevance in Today’s Technology Landscape

LLMs have become integral in today's technology landscape. They are the backbone of numerous applications we encounter daily, from search engines and virtual assistants to advanced translation services and content creation tools. Their ability to process and generate human-like text has opened new frontiers in human-computer interaction, making technology more accessible and intuitive. As these models continue to evolve, their impact is expanding into areas like healthcare, law, and education, offering potential solutions to complex problems and enhancing human productivity and creativity.

This introduction sets the stage for a deeper exploration of how LLMs function, their architecture, applications, and the challenges they present, laying the foundation for understanding one of the most influential technologies in the modern era.

Core Principles and Technologies Behind Large Language Models

1. Overview of Artificial Intelligence and Machine Learning

Artificial Intelligence (AI) is a broad field focused on creating machines capable of performing tasks that typically require human intelligence. Machine Learning (ML), a subset of AI, involves the development of algorithms that enable computers to learn patterns and make decisions from data rather than follow explicitly programmed rules. LLMs are a product of advancements in both fields: they are ML models that learn how language works from text data.

2. Understanding Neural Networks

At the heart of LLMs are neural networks, loosely inspired by the structure of the human brain. These networks consist of layers of interconnected nodes, or "neurons," which process and transmit information. Each neuron computes a weighted sum of its inputs, adds a bias term, and passes the result through a nonlinear activation function. During training, the weights and biases are adjusted so that the network learns intricate patterns in the data.
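The neuron computation described above can be sketched in a few lines of Python. This is a minimal illustration, not how production frameworks implement it: the weights, biases, and inputs are made-up values, and ReLU is assumed as the activation function (one common choice among several).

```python
def relu(z: float) -> float:
    """Rectified linear unit: a common nonlinear activation function."""
    return max(0.0, z)

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of inputs, plus a bias, then an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return relu(z)

def dense_layer(inputs: list[float], weight_rows: list[list[float]], biases: list[float]) -> list[float]:
    """A layer is many neurons reading the same inputs, each with its own weights and bias."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical values: 3 inputs feeding a layer of 2 neurons.
x = [1.0, 2.0, 3.0]
W = [[0.5, -0.25, 0.1],
     [-1.0, 0.5, 0.0]]
b = [0.0, 0.5]
out = dense_layer(x, W, b)
print(out)
```

Training consists of nudging the numbers in `W` and `b` so that the layer's outputs move closer to the desired ones; a deep network simply feeds the output of one such layer into the next.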

3. Deep Learning in the Context of LLMs

Deep learning, a subset of ML, involves training these neural networks with many layers (hence "deep") to learn from vast amounts of data. In the context of LLMs, deep learning enables the model to understand and generate language by learning from a large corpus of text data, recognizing patterns in how words and phrases are used.

4. Natural Language Processing (NLP)

NLP is a field at the intersection of computer science, AI, and linguistics, focused on enabling computers to understand and process human language. LLMs are a significant advancement in NLP: they handle context, and often subtler cues such as tone, sarcasm, and nuance, far better than earlier models, although this handling remains imperfect.

By understanding these core principles and technologies, one gains insight into the foundational elements that make LLMs so powerful and versatile in processing and generating human language. This understanding is crucial for appreciating the complexity and capabilities of these models, which will be further explored in the subsequent sections of the deep dive.

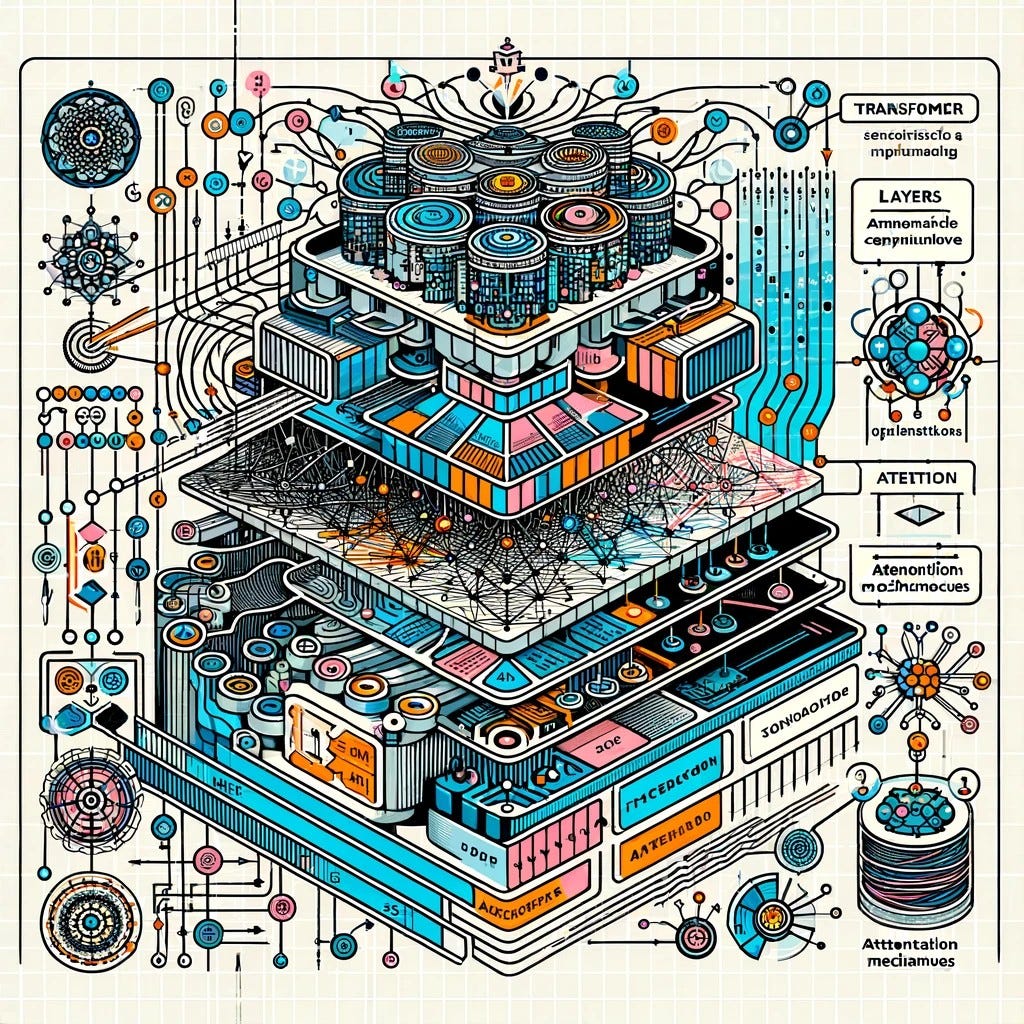

Architecture of Large Language Models

1. Explanation of Key Components (like Transformers)

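The architecture of most modern Large Language Models (LLMs) is based on the transformer, a neural network design introduced in 2017. Transformers use self-attention to process each word (more precisely, each token) in relation to the other words in the input, whereas earlier recurrent models processed words one at a time in sequential order. This allows LLMs to capture context and long-range relationships between words more effectively, and it lets training be parallelized across all positions in a sequence. In GPT-style models, each token attends only to the tokens before it, so the model can be trained to predict what comes next.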
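As a reference point, the self-attention used in the original transformer can be written as scaled dot-product attention. In this sketch, Q, K and V are matrices whose rows are the query, key and value vectors computed from the input tokens, and d_k is the dimension of the key vectors. The mask M is a notational convenience of this presentation: it is all zeros for bidirectional models such as BERT, and follows the causal pattern shown for GPT-style models, where an entry of −∞ gives that position zero weight after the softmax.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}} + M\right) V,
\qquad
M_{ij} =
\begin{cases}
0 & \text{if } j \le i \\
-\infty & \text{if } j > i
\end{cases}
```

The division by √d_k keeps the dot products from growing with the vector dimension, which would otherwise push the softmax toward nearly one-hot weights.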

2. Layers and Their Functions in LLMs

LLMs typically consist of multiple layers, each performing specific functions. These include:

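Input Layer: Converts the input text, after tokenization, into numerical vectors (embeddings) that the model can process.

Hidden Layers: Multiple layers where the actual processing and learning occur. In a transformer-based LLM these are stacked transformer blocks, each combining self-attention with a feed-forward network, which progressively capture complex patterns and relationships.

Output Layer: Produces the final output. For text generation, it assigns a score to every token in the vocabulary and converts the scores into probabilities (typically with a softmax), from which the next token is chosen; for classification or prediction tasks, it produces the corresponding label or value.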
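The flow from input layer to output layer can be sketched as a toy Python pipeline. Everything here is a simplifying assumption: a hypothetical four-token vocabulary with hand-picked 2-dimensional embeddings, the hidden layers reduced to an identity placeholder, and an output projection that reuses the embedding matrix (weight tying, a design some models use but not a requirement).

```python
import math

# Hypothetical 4-token vocabulary and 2-dimensional embeddings. Real models use
# tens of thousands of tokens and embeddings with thousands of dimensions.
VOCAB = ["the", "cat", "sat", "mat"]
EMBEDDINGS = [[0.1, 0.3], [0.9, -0.2], [-0.4, 0.8], [0.7, 0.5]]

def input_layer(token: str) -> list[float]:
    """Input layer: map the token to its ID, then look up its embedding vector."""
    return EMBEDDINGS[VOCAB.index(token)]

def hidden_layers(h: list[float]) -> list[float]:
    """Placeholder for the stack of transformer blocks; an identity map here."""
    return h

def output_layer(h: list[float]) -> list[float]:
    """Output layer: score every vocabulary token, then softmax into probabilities."""
    # Assumption of this sketch: the output projection reuses the embedding matrix.
    logits = [sum(a * b for a, b in zip(h, e)) for e in EMBEDDINGS]
    m = max(logits)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = output_layer(hidden_layers(input_layer("cat")))
for token, p in zip(VOCAB, probs):
    print(f"{token}: {p:.3f}")
```

In a trained model the hidden layers transform the vector substantially, so the most probable output token depends on the whole context rather than echoing the input as this untrained toy does.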

3. Role of Attention Mechanisms

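Attention mechanisms in LLMs allow the model to focus on different parts of the input text when processing each token. For every token, the model computes relevance scores against the other tokens and uses them to form a weighted combination of their representations. This is loosely analogous to human attention, which concentrates on the parts of the input that matter for a decision. In LLMs, this is crucial for understanding context and meaning, especially in longer texts.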
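The score-then-weight process can be sketched as scaled dot-product attention in plain Python. To keep it short, this sketch assumes the queries, keys and values are the token vectors themselves; real transformers first multiply the input by learned projection matrices and run several attention "heads" in parallel. The toy vectors are hypothetical.

```python
import math

def softmax(row: list[float]) -> list[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    s = sum(exps)
    return [e / s for e in exps]

def attention(Q, K, V, causal=False):
    """Scaled dot-product attention over lists of vectors (one vector per token)."""
    d_k = len(K[0])
    outputs, all_weights = [], []
    for i, q in enumerate(Q):
        # Relevance score of token i against every token j.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        if causal:
            # Hide future tokens (j > i), as in GPT-style decoders.
            scores = [s if j <= i else -math.inf for j, s in enumerate(scores)]
        w = softmax(scores)
        all_weights.append(w)
        # The output for token i is the attention-weighted average of the value vectors.
        outputs.append([sum(w[j] * V[j][c] for j in range(len(V))) for c in range(len(V[0]))])
    return outputs, all_weights

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical vectors for 3 tokens
out, weights = attention(X, X, X, causal=True)
print(weights[0])  # → [1.0, 0.0, 0.0]: the first token can only attend to itself
print(weights[2])  # the last token spreads its attention over all three tokens
```

Because every row of scores is computed independently, all tokens can be processed at once, which is what makes transformers easier to parallelize than sequential models.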

4. Data Processing and Model Training Methodologies

Data processing involves converting large datasets of text into a format suitable for training the LLM. This typically involves tokenization (breaking text into smaller units such as words, subwords, or characters and mapping each unit to an integer ID; most modern LLMs use subword schemes such as byte-pair encoding) and normalization (standardizing text data, for example its Unicode form and whitespace). Training involves feeding this processed data into the model and adjusting its internal parameters to reduce a loss, typically the error in predicting the next token. This is often done using vast datasets and requires significant computational power.
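A minimal Python sketch of normalization and tokenization follows. It deliberately uses a simple word-level tokenizer so the steps are visible; production LLMs instead use learned subword tokenizers, and many of them preserve letter case, so the lowercasing here is an illustrative choice rather than standard practice.

```python
import re
import unicodedata

def normalize(text: str) -> str:
    """Normalization: Unicode NFKC form, lowercase, collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text).lower()
    return re.sub(r"\s+", " ", text).strip()

def tokenize(text: str) -> list[str]:
    """Word-level tokenization: runs of word characters, or single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)

def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign each distinct token an integer ID, in order of first appearance."""
    vocab: dict[str, int] = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab

tokens = tokenize(normalize("The  cat sat.\nThe cat SAT!"))
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]
print(tokens)  # → ['the', 'cat', 'sat', '.', 'the', 'cat', 'sat', '!']
print(ids)     # → [0, 1, 2, 3, 0, 1, 2, 4]
```

The resulting integer IDs are what the model's input layer actually receives; a subword tokenizer would additionally split rare words into smaller known pieces so that no input is ever out of vocabulary.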

Understanding the architecture of LLMs is essential to comprehend how they process and generate language so effectively. This knowledge sets the stage for exploring how these models are trained, their applications, and the challenges they present.

Training Large Language Models

1. Data Collection and Preprocessing

Training a Large Language Model (LLM) starts with collecting a vast and diverse dataset. This dataset typically consists of text from various sources like books, websites, and articles to ensure a wide range of language styles and topics. Preprocessing this data is crucial and involves cleaning (removing irrelevant or sensitive information), tokenizing (splitting text into smaller units like words or characters), and normalizing (standardizing text format).
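The cleaning step can be sketched in Python as a small filter over documents. The specific rules are assumptions chosen for illustration: e-mail addresses stand in for sensitive information, a minimum word count stands in for "irrelevant" fragments, and only exact duplicates are removed. Real pipelines use many more heuristics, including near-duplicate detection and quality classifiers.

```python
import re

# A simple (not exhaustive) pattern for e-mail addresses.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

def clean_corpus(docs: list[str], min_words: int = 5) -> list[str]:
    """Redact e-mail addresses, drop very short fragments, and drop exact duplicates."""
    seen: set[str] = set()
    cleaned = []
    for doc in docs:
        doc = EMAIL.sub("[EMAIL]", doc)   # remove sensitive information
        if len(doc.split()) < min_words:  # drop fragments too short to be useful
            continue
        if doc in seen:                   # drop exact duplicates
            continue
        seen.add(doc)
        cleaned.append(doc)
    return cleaned

# Hypothetical raw documents.
docs = [
    "Contact me at jane.doe@example.com for the full report on rainfall.",
    "Contact me at jane.doe@example.com for the full report on rainfall.",
    "Click here",
    "Rainfall in the region rose sharply during the last decade.",
]
result = clean_corpus(docs)
for doc in result:
    print(doc)
```

Tokenization and normalization would then be applied to the cleaned documents.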

2. Supervised vs. Unsupervised Learning Approaches

LLMs are typically trained with a combination of supervised and unsupervised (more precisely, self-supervised) methods:

Supervised Learning: Involves training the model on labeled data, where the desired output is provided by humans, for example question-answer pairs or reviews tagged with a sentiment. For LLMs, this is typically used during fine-tuning.

Unsupervised (Self-Supervised) Learning: Focuses on training with unlabeled text. LLM pre-training is usually described as self-supervised: the model learns to predict the next word (or a masked word) in a sentence, and the correct answer is taken from the text itself rather than from human annotation. This is how the model learns general patterns and structures of language without explicit instructions.
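The reason next-word prediction needs no human labels can be shown with a short Python sketch that turns raw text into (context, target) training pairs. The whitespace tokenization and the fixed context size of 3 are simplifying assumptions; real LLMs use subword tokens and contexts of thousands of tokens.

```python
def next_token_pairs(tokens: list[str], context_size: int = 3) -> list[tuple[list[str], str]]:
    """Turn unlabeled text into (context, target) training examples.

    The target is simply the token that follows the context, so the text
    supplies its own labels: no human annotation is needed.
    """
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs

pairs = next_token_pairs("the cat sat on the mat".split())
for context, target in pairs:
    print(context, "->", target)
```

A supervised fine-tuning dataset, by contrast, would pair each input with an output that someone wrote or selected specifically as the desired answer.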

3. Challenges in Training

Training LLMs presents several challenges:

Computational Requirements: These models require immense computational power and resources, often needing specialized hardware like GPUs or TPUs.

Data Quality and Bias: The quality of training data significantly affects the model's performance. Biased or poor-quality data can lead to biased or inaccurate outputs.

Overfitting and Generalization: Ensuring that the model generalizes well to new, unseen data, while not overfitting to the training data, is a delicate balance.
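The scale of the computational requirements can be estimated with two common rules of thumb: the weights alone need roughly (number of parameters × bytes per parameter) of memory, and training a dense transformer takes about 6 × N × D floating-point operations, where N is the parameter count and D the number of training tokens. The Python sketch below applies them to a hypothetical 7-billion-parameter model trained on one trillion tokens; both figures are illustrative assumptions, not a description of any specific model.

```python
def weight_memory_gb(n_params: float, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone; 2 bytes per parameter corresponds to 16-bit precision."""
    return n_params * bytes_per_param / 1e9

def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute for a dense transformer: about 6 * N * D FLOPs."""
    return 6 * n_params * n_tokens

# Hypothetical model and dataset sizes, chosen only for illustration.
N = 7e9    # parameters
D = 1e12   # training tokens
print(f"weights in 16-bit: {weight_memory_gb(N):.0f} GB")
print(f"training compute: {training_flops(N, D):.1e} FLOPs")
```

This gives 14 GB for 16-bit weights and about 4.2 × 10^22 FLOPs. Training needs considerably more memory than the weights alone, since gradients, optimizer state, and intermediate activations must also be stored, which is one reason large models are spread across many GPUs or TPUs.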

4. Fine-tuning Models for Specific Tasks or Languages

Once an LLM is trained, it can be fine-tuned for specific tasks (like question answering, translation, or summarization) or specific languages. Fine-tuning involves additional training on a smaller, task-specific dataset, allowing the model to adapt its learned patterns to perform specific functions or understand specific language nuances.
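The core idea of fine-tuning, continuing training from already-learned parameters on a small task-specific dataset, can be illustrated with a deliberately tiny stand-in: a one-variable linear model trained by gradient descent instead of an LLM. The datasets, learning rates, and step counts are hypothetical; real fine-tuning updates far more parameters (sometimes only a chosen subset of them), but the principle of starting from pre-trained weights is the same.

```python
def mse(w: float, b: float, data: list[tuple[float, float]]) -> float:
    """Mean squared error of the model y = w * x + b on the dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(w: float, b: float, data: list[tuple[float, float]], lr: float, steps: int):
    """Plain gradient descent on mean squared error."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# "Pre-training": a larger, general dataset following y = 2x.
general = [(x / 10, 2 * x / 10) for x in range(-20, 21)]
w, b = train(0.0, 0.0, general, lr=0.1, steps=500)

# "Fine-tuning": a small task dataset whose relationship is shifted (y = 2x + 1).
task = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)]
before = mse(w, b, task)
w_ft, b_ft = train(w, b, task, lr=0.05, steps=200)  # start from the pre-trained weights
after = mse(w_ft, b_ft, task)
print(f"task loss before fine-tuning: {before:.3f}, after: {after:.4f}")
```

The pre-trained slope is already correct, so fine-tuning mainly has to learn the task-specific offset; in the same way, a fine-tuned LLM keeps most of its general language knowledge while adapting to the new task.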

The process of training LLMs is complex and resource-intensive, but it is crucial for developing models that can understand and generate human language with high accuracy and versatility. This understanding of training processes and challenges is essential for appreciating the capabilities and limitations of LLMs.

Applications of Large Language Models

1. Natural Language Understanding (NLU) and Generation (NLG)

Large Language Models (LLMs) are pivotal in advancing Natural Language Understanding (NLU) and Natural Language Generation (NLG). In NLU, they interpret human language and extract meaning from it, enabling them to answer questions, summarize texts, or provide recommendations. In NLG, they generate coherent, contextually relevant, and often creative text, making them capable of writing articles, composing poetry, or even writing code.
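Text generation in LLMs is autoregressive: the model scores every possible next token, one token is chosen, appended, and the process repeats. The Python sketch below shows that loop with two common decoding strategies, greedy decoding and temperature sampling. The "model" is an assumption for illustration: a hypothetical table of made-up next-token scores that looks only at the previous word, whereas a real LLM conditions on the entire preceding context.

```python
import math
import random

# Hypothetical next-token scores (logits) standing in for a trained model.
BIGRAM_LOGITS = {
    "<s>": {"the": 2.0, "a": 1.0},
    "the": {"cat": 1.5, "dog": 1.2},
    "a": {"cat": 1.0, "dog": 1.0},
    "cat": {"sat": 2.0, "ran": 1.0},
    "dog": {"sat": 1.0, "ran": 2.0},
    "sat": {"down": 2.0, "</s>": 1.0},
    "ran": {"away": 2.0, "</s>": 1.0},
    "down": {"</s>": 1.0},
    "away": {"</s>": 1.0},
}

def next_token(prev: str, temperature: float, rng: random.Random) -> str:
    logits = BIGRAM_LOGITS[prev]
    if temperature == 0:
        # Greedy decoding: always pick the highest-scoring token.
        return max(logits, key=logits.get)
    # Temperature sampling: softmax over logits / temperature, then draw one token.
    tokens = list(logits)
    weights = [math.exp(logits[t] / temperature) for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

def generate(max_tokens: int = 10, temperature: float = 0.0, seed: int = 0) -> str:
    rng = random.Random(seed)
    out, prev = [], "<s>"
    for _ in range(max_tokens):
        prev = next_token(prev, temperature, rng)
        if prev == "</s>":  # end-of-sequence token stops generation
            break
        out.append(prev)
    return " ".join(out)

print(generate())                              # → the cat sat down
print(generate(temperature=1.0, seed=1))       # a sampled, possibly different sentence
```

Lower temperatures make output more predictable; higher temperatures flatten the probabilities and make less likely continuations more frequent, which is one knob behind the "creative" behavior described above.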

2. Use in Chatbots and Virtual Assistants

LLMs have significantly enhanced the capabilities of chatbots and virtual assistants. By understanding and generating natural language, they can engage in more meaningful, context-aware conversations with users, providing assistance, answering queries, and even simulating social interaction. This has wide applications in customer service, personal assistants, and interactive entertainment.
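Context-aware conversation depends on resending the earlier turns to the model, but every model has a limited context window, so chat applications must decide what to keep. Below is a minimal Python sketch of one simple policy: keep the system instructions plus as many of the most recent turns as fit a token budget. The role/content message format, the assumption that the first message is the system message, and the word count used in place of a real tokenizer are all hypothetical simplifications.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: count whitespace-separated words."""
    return len(text.split())

def fit_history(messages: list[dict], budget: int) -> list[dict]:
    """Keep the system message plus the most recent turns that fit within `budget` tokens."""
    system, turns = messages[0], messages[1:]  # assumes messages[0] is the system message
    used = count_tokens(system["content"])
    kept = []
    for msg in reversed(turns):  # walk from newest to oldest
        cost = count_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    return [system] + list(reversed(kept))

history = [
    {"role": "system", "content": "You are a helpful support assistant."},
    {"role": "user", "content": "My order has not arrived yet."},
    {"role": "assistant", "content": "Sorry to hear that. What is the order number?"},
    {"role": "user", "content": "It is 12345."},
]
trimmed = fit_history(history, budget=20)
for msg in trimmed:
    print(msg["role"], ":", msg["content"])
```

Dropping the oldest turns is the simplest choice; it explains why a long conversation can "forget" early details, and more elaborate systems summarize older turns instead of discarding them.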

3. Applications in Content Creation, Summarization, and Translation

In content creation, LLMs assist in generating creative writing, marketing copy, and journalistic content. They are also used to summarize long documents by extracting key points and presenting them concisely. Additionally, their ability to model context and idiomatic usage has improved machine translation services, helping to bridge language barriers more effectively.

4. Ethical Considerations and Responsible AI Use

With these applications come ethical considerations. Issues such as data privacy, potential misuse, and the propagation of biases present challenges. Ensuring responsible AI use involves transparency in how these models are trained and used, understanding their limitations, and continually working to mitigate biases and ensure fairness.

The applications of LLMs are vast and growing, impacting various sectors from business to creative arts. Understanding these applications helps to appreciate the potential of LLMs while also acknowledging the need for responsible and ethical AI development and deployment.

Challenges and Limitations of Large Language Models

1. Understanding Biases in LLMs

One of the most significant challenges in LLMs is bias, which stems largely from the training data. Since these models learn from existing human-generated texts, they can inadvertently learn and perpetuate societal biases present in these texts. This can lead to biased or discriminatory outputs in certain contexts, raising concerns about fairness and equality in AI applications.
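To make the mechanism concrete, here is a minimal Python sketch of one simple way to inspect training text for skewed associations: counting how often occupation words appear in the same sentence as gendered pronouns. The five-sentence corpus and the word lists are made up for illustration; real bias audits use far larger corpora and dedicated evaluation benchmarks. A model trained on text with skewed statistics like these can learn to reproduce them in its outputs.

```python
from collections import Counter

def cooccurrence(corpus: list[str], targets: set[str], attributes: set[str]) -> Counter:
    """Count how often each target word shares a sentence with each attribute word."""
    counts: Counter = Counter()
    for sentence in corpus:
        words = set(sentence.lower().replace(".", "").split())
        for t in targets & words:
            for a in attributes & words:
                counts[(t, a)] += 1
    return counts

# Hypothetical toy corpus.
corpus = [
    "The nurse said she would help.",
    "The nurse said she was tired.",
    "The engineer said he fixed it.",
    "The engineer said she fixed it.",
    "The engineer said he was late.",
]
counts = cooccurrence(corpus, {"nurse", "engineer"}, {"he", "she"})
for pair in sorted(counts):
    print(pair, counts[pair])
```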

2. Ethical Concerns and Societal Impact

LLMs raise various ethical concerns. The potential for misuse in generating misleading information or deepfakes is a significant worry. There's also concern about their impact on jobs, particularly in fields like writing and customer service. The need for ethical guidelines and regulations in the development and deployment of these technologies is increasingly recognized.

3. Computational and Environmental Cost

Training and running LLMs require substantial computational resources, leading to high energy consumption. This has environmental implications, particularly in terms of carbon footprint. Balancing the benefits of advanced AI models with their environmental impact is a growing concern.
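The environmental cost can be estimated with simple arithmetic: energy is the number of accelerators times their average power draw times training time, scaled by the data center's power usage effectiveness (PUE, the ratio of total facility energy to the energy used by the computing equipment); emissions are that energy times the carbon intensity of the electricity supply. The Python sketch below applies this formula to hypothetical inputs (1,000 GPUs averaging 0.4 kW for 30 days, a PUE of 1.2, and 0.4 kg CO2 per kWh), none of which describe any real training run.

```python
def training_energy_kwh(num_gpus: int, gpu_power_kw: float, hours: float, pue: float = 1.0) -> float:
    """Energy = accelerators x average power x time x data-center overhead (PUE)."""
    return num_gpus * gpu_power_kw * hours * pue

def emissions_tonnes_co2(energy_kwh: float, kg_co2_per_kwh: float) -> float:
    """Emissions = energy x carbon intensity of the electricity, converted from kg to tonnes."""
    return energy_kwh * kg_co2_per_kwh / 1000

# All inputs are hypothetical, for illustration only.
energy = training_energy_kwh(num_gpus=1000, gpu_power_kw=0.4, hours=720, pue=1.2)
co2 = emissions_tonnes_co2(energy, kg_co2_per_kwh=0.4)
print(f"{energy:,.0f} kWh, about {co2:,.0f} t CO2")
```

With these assumed inputs the estimate is 345,600 kWh and about 138 t CO2. Because carbon intensity varies widely between electricity grids, where a model is trained can matter as much as how long it is trained.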

4. Limitations in Understanding Context and Nuance

Despite their advanced capabilities, LLMs still struggle with understanding context and nuance, especially in complex or ambiguous situations. They lack real-world experience and common sense reasoning, which can lead to errors or nonsensical outputs. This limitation underscores the importance of human oversight in their application.

These challenges and limitations highlight that while LLMs are powerful tools, they are not without their flaws. Addressing these issues is crucial for the responsible development and use of these technologies, ensuring they are beneficial and do not perpetuate harm or inequality.

Future of Large Language Models

1. Advances in Model Architectures and Algorithms

The future of Large Language Models (LLMs) will likely see continuous advancements in model architectures and learning algorithms. Researchers are working on developing more efficient, less resource-intensive models that can process language more effectively. Innovations may include better understanding of context and the ability to reason more like humans. There is also a trend towards more generalized models capable of performing a wider range of tasks.

2. The Role of LLMs in Shaping Future Technologies

LLMs are poised to play a significant role in shaping future technologies. Their ability to understand and generate human language could revolutionize how we interact with machines, making technology more accessible and intuitive. They are expected to find applications in more fields, from enhancing educational tools to aiding in complex scientific research.

3. Integration with Other AI Technologies

An exciting prospect is the integration of LLMs with other AI technologies. Combining LLMs with advancements in fields like computer vision and robotics could lead to more sophisticated AI systems. For example, robots with advanced language understanding capabilities could transform industries like healthcare and manufacturing.

4. Ethical and Regulatory Considerations for Future Development

As LLMs become more ingrained in various aspects of life, ethical and regulatory considerations will become increasingly important. This includes addressing biases in AI, ensuring data privacy, and managing the potential displacement of jobs. Establishing global standards and frameworks for the ethical development and deployment of these technologies will be crucial.

The future of LLMs is bright and filled with potential. As these models become more advanced and integrated into different technologies and sectors, they hold the promise of significant benefits. However, navigating their development responsibly to maximize their positive impact while minimizing potential harms remains a key challenge.

Conclusion

Summarizing the State of LLMs Today

Large Language Models (LLMs) have reached an unprecedented level of sophistication, profoundly impacting various sectors including technology, communication, and content creation. Their ability to understand and generate human language has opened up new possibilities for human-computer interaction, making technology more accessible and intuitive. Today, LLMs are at the forefront of artificial intelligence research and application, demonstrating remarkable capabilities in tasks ranging from simple text generation to complex problem-solving.

Potential Future Developments and Impacts

The future of LLMs holds immense potential. Continued advancements in AI and machine learning promise even more powerful and efficient models. We can expect LLMs to become more integrated into our daily lives, reshaping how we interact with technology and each other. The potential for these models to aid in education, healthcare, and other critical sectors is vast, offering opportunities for significant societal benefits.

Final Thoughts on the Responsible Use and Evolution of LLMs

As we embrace the capabilities of LLMs, it is imperative to approach their development and application with a sense of responsibility. Addressing ethical concerns, mitigating biases, and ensuring fair and equitable use of these technologies are crucial tasks. The development of LLMs should be guided by a commitment to benefiting society while respecting privacy, security, and ethical standards.

In conclusion, while LLMs present exciting possibilities, their responsible development and use will determine their impact on society. Balancing innovation with ethical considerations is key to harnessing the full potential of these remarkable tools in a way that benefits all.